Primer on Knowledge Representations and Acquisition

Author: Susanne Lomatch

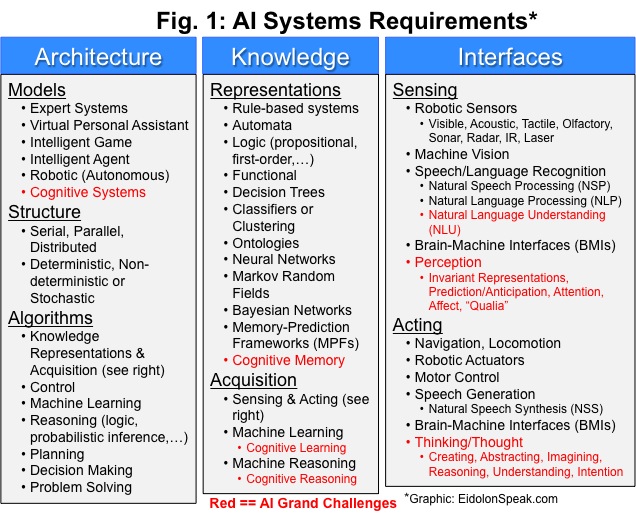

How knowledge is represented is central to an AI or cognitive system, and is used to facilitate sensing, acting, learning, reasoning, planning, decision making, problem solving, perception and thought (see Fig. 1). There are varying definitions for knowledge representations, but the one I prefer is a generalized one based on symbolic and/or structural forms or frameworks:

Knowledge Representations:

Symbolic

Rule-based systems

Automata

Logic (propositional, first-order, )

Functional or Operational

Structural or Hybrid

Decision Trees

Classifiers or Clustering

Ontologies

Neural Networks

Markov Random Fields

Bayesian Networks

Memory-Prediction Frameworks (MPFs)

Cognitive Memory

This list and its taxonomy are my own and do not exhaust the number of possibilities.

Knowledge and information are often used interchangeably, though information is usually viewed as the more fundamental notion, with the tenets of information theory forming a rigorous basis. I do not take such a narrow view here. In considering artificial cognitive systems (ACS), I think one must broaden the view beyond the constructs commonly discussed in information theory (most particularly coding theory), which may prejudice an ACS designer toward architectures more closely associated with non-biological systems than with uniquely biological systems, such as the brain.

The application of the knowledge representations listed above can get more specific, depending on the knowledge domain. For example, natural language knowledge can be represented by symbolic rule-based and corresponding automata models (e.g., the Chomsky hierarchy), by structural neural or Bayesian network models, or by both (hybrids). Natural speech is commonly represented by stochastic models, such as Markov random field models. Semantic information is commonly represented by ontologies. An artificial or machine vision system may represent knowledge in a memory-prediction framework, such as hierarchical temporal memory. A cognitive system will represent knowledge in a somewhat more complex cognitive memory model, perhaps incorporating a hybrid of structural and symbolic representations, and based on biologically inspired systems (e.g., the human or mammalian neural functional architecture, i.e., the brain).

Knowledge acquisition is also central to an AI or cognitive system, and I keep the scope fairly simple:

Knowledge Acquisition:

Sensing & Acting

Machine Learning

Machine Reasoning

Sensing and acting are basic to any AI or cognitive system, and their extent is broad (see list in Fig. 1). I offer a separate Primer on Machine Learning. Machine reasoning is highly dependent on the type of knowledge representation used. For example, with ontologies, the relational structure enables basic embedded reasoning about the entities within the ontological domain. Likewise, logic and functional/operational representations have embedded mathematical reasoning. With neural networks, reasoning is not so straightforward, as the network explicitly encodes information or knowledge (through various machine learning modes), but does not explicitly indicate how that knowledge might be used for reasoning or predictions. Bayesian belief networks both encode information and explicitly include probabilistic reasoning. Memory-prediction frameworks are a type of Bayesian net inspired by the human-mammalian neocortex (see Part 3). Cognitive memories may be a generalization of an MPF to include hetero-associative memories and relational reasoning, important for representing and processing semantic structures and other complexities in natural language and speech.

One additional note on the importance of knowledge representations, in the form of a reiteration of a point originally made by Minsky and Papert: no machine can learn to recognize X unless it possesses, at least potentially, some scheme for representing X. As it turns out, this is just the beginning of the problem. Knowledge representations are integrally tied to learning and reasoning propensities, and in practice, many representations lead to intractable machine learning and reasoning. For the harder problems such as natural language and speech, the goal is to find suitable compositional representations that are symbiotic with tractable and efficient machine learning and reasoning, i.e., fast structural processing, transformations, mapping and inferencing – a challenging problem, given the nonlinear, deep structure nature of natural language and speech.

For those looking for more detail, I have expanded the outline at the top, and have included wiki or external links where possible for even further edification.

Knowledge Representations:

Symbolic

Rule-based systems

Based on explicit representations of facts about the knowledge domain

Reasoning: Not embedded, must be implemented separately
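To illustrate the point that reasoning is not embedded in a rule-based representation, here is a minimal Python sketch. The rules and facts are plain data, and a separate forward-chaining routine does the reasoning. The function name `forward_chain` and the toy rules are my own assumptions for illustration, not taken from any particular rule engine.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only when all of its premises are already known.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rules: (premises, conclusion)
RULES = [
    (("has_feathers",), "is_bird"),
    (("is_bird", "can_fly"), "can_migrate"),
]

derived = forward_chain({"has_feathers", "can_fly"}, RULES)
```

The rule list by itself says nothing about how conclusions are reached; swapping in a backward-chaining routine would leave the representation untouched.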

Automata

Abstract, self-acting mathematical machines

Knowledge/information is encoded in the automaton states and transitions

A mathematical formalization of rule-based systems

Reasoning: Not embedded, must be implemented separately
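As a small illustration of knowledge encoded in states and transitions, the following Python sketch defines a deterministic finite automaton that accepts binary strings containing an even number of 1s. The state names and the `accepts` helper are assumptions chosen for this example.

```python
# Transition table: (current state, input symbol) -> next state
TRANSITIONS = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}


def accepts(word, start="even", accepting=("even",)):
    """Run the automaton over `word` and report whether it ends in an accepting state."""
    state = start
    for symbol in word:
        state = TRANSITIONS[(state, symbol)]
    return state in accepting
```

Everything the machine "knows" about parity lives in the transition table; the loop that walks the table is the separately implemented processing step.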

Logic (propositional, first-order, )

Knowledge/information is expressed in a mathematical logic formalism

Reasoning: Embedded in the specific axiomatic formalism used

Note: There is a distinction between formal logic and informal logic. The mathematical reasoning derived from formal logic is based on deduction, while reasoning in informal logic can take various forms (deductive, inductive, abductive, analogical, etc.); informal logic is sometimes termed critical thinking
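For propositional logic, deductive reasoning can be checked mechanically. The sketch below, in Python, tests entailment by enumerating every truth assignment (a truth-table method); it is only practical for a handful of symbols. The `entails` function and the lambda encoding of formulas are illustrative assumptions.

```python
from itertools import product


def entails(premises, conclusion, symbols):
    """Return True if every model satisfying all premises also satisfies the conclusion."""
    for values in product([True, False], repeat=len(symbols)):
        model = dict(zip(symbols, values))
        if all(p(model) for p in premises) and not conclusion(model):
            return False  # found a counterexample
    return True


def implies(m):
    """The formula p -> q, written as (not p) or q."""
    return (not m["p"]) or m["q"]


# Modus ponens: from p and p -> q, infer q (valid).
modus_ponens = entails([lambda m: m["p"], implies], lambda m: m["q"], ["p", "q"])

# Affirming the consequent: from q and p -> q, infer p (invalid).
affirming_consequent = entails([lambda m: m["q"], implies], lambda m: m["p"], ["p", "q"])
```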

Functional or Operational

Knowledge/information is represented in functional or operational form

Reasoning: Can be embedded in the specific function or operation, but may also be applied separately

Structural or Hybrid

Decision Trees

Knowledge/information is represented in a graphical model of decisions and their possible outcomes

Reasoning: Embedded in the possible outcomes, but can also be applied separately to find the optimal outcome (along with machine learning techniques)
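A decision tree can be sketched as nested data, with the reasoning amounting to a walk from the root to a leaf. The Python example below uses a hand-built tree; the feature names and outcomes are hypothetical, and in practice the tree would normally be learned from data.

```python
# Internal nodes are dicts naming a feature and its branches; leaves are outcomes.
TREE = {
    "feature": "outlook",
    "branches": {
        "sunny": {
            "feature": "windy",
            "branches": {True: "stay_in", False: "play"},
        },
        "rainy": "stay_in",
        "overcast": "play",
    },
}


def decide(tree, sample):
    """Follow the branch matching each tested feature until a leaf is reached."""
    node = tree
    while isinstance(node, dict):
        node = node["branches"][sample[node["feature"]]]
    return node
```

For example, `decide(TREE, {"outlook": "sunny", "windy": False})` follows the sunny branch, then the not-windy branch, and lands on the leaf `"play"`.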

Classifiers or Clustering

Classifiers represent labeled knowledge/information in groups based on statistical sampling or quantitative criteria, such as traits and characteristics, i.e., there are supervisory constraints in the grouping, and an inferred relationship between inputs and outputs

Clusters represent unlabeled knowledge/information in groups of data objects according to a cluster model, i.e., in an unsupervised manner, with possible display of hidden or underlying structure

Reasoning: Not embedded, must be implemented separately
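The supervised/unsupervised distinction can be made concrete with a one-dimensional toy example in Python. The first two functions form a nearest-centroid classifier that uses labels; the third is a bare-bones k-means that finds groups without them. The data, function names and starting centers are all assumptions for illustration.

```python
from statistics import mean


def fit_centroids(points, labels):
    """Supervised: average the points belonging to each label."""
    groups = {}
    for x, y in zip(points, labels):
        groups.setdefault(y, []).append(x)
    return {label: mean(xs) for label, xs in groups.items()}


def classify(centroids, x):
    """Assign x the label of the nearest centroid."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


def kmeans_1d(points, centers, iterations=10):
    """Unsupervised: alternate between assigning points and re-averaging centers."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for x in points:
            nearest = min(range(len(centers)), key=lambda i: abs(centers[i] - x))
            clusters[nearest].append(x)
        # Keep the old center if a cluster ends up empty.
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return sorted(centers)


DATA = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centroids = fit_centroids(DATA, ["small"] * 3 + ["large"] * 3)
label = classify(centroids, 4.5)
centers = kmeans_1d(DATA, centers=[0.0, 10.0])
```

On this data both approaches recover the same two groups, but only the classifier can name them, since the names come from the supervisory labels.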

Ontologies

Represents knowledge/information as a set of concepts within a domain, and the relationships between those concepts

Many ontologies are graph-based knowledge representation languages, and some are based on formal logic (see ontology languages)

Specific examples: Semantic networks, description logic

Reasoning: Embedded in the relational structure between entities in the domain
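The idea that reasoning is embedded in the relational structure can be shown with a tiny is-a hierarchy in Python. The concepts and the helper names `ancestors` and `is_a` are hypothetical; real ontology languages offer far richer relations than this single-parent sketch.

```python
# Each concept maps to its direct parent concept (a single-inheritance is-a relation).
IS_A = {
    "sparrow": "bird",
    "bird": "animal",
    "salmon": "fish",
    "fish": "animal",
}


def ancestors(concept):
    """Return all concepts reachable by following is-a links upward."""
    chain = []
    while concept in IS_A:
        concept = IS_A[concept]
        chain.append(concept)
    return chain


def is_a(concept, category):
    """Subsumption check: does `concept` fall under `category`?"""
    return category in ancestors(concept)
```

The fact that a sparrow is an animal is never stored directly; it follows from the structure of the relations.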

Neural Networks (ANNs)

Structured and taught to represent knowledge/information in the form of sequences or more complicated data structures

Network nodes are artificial neurons

Network connections are (synaptic) weights encoding the strength of the connection

Each network node is associated with an activation or transfer function F(w(x)) that takes as input the weighted activations of the node's parent nodes (dendrites) and outputs a thresholded value (axon) that propagates to the input of the next layer through a (synaptic) weight function

Knowledge acquired through training with machine learning techniques is stored in the pattern of interconnection weights among components; weights are updated/changed through learning

Specific examples: Multilayer feedforward (directed acyclic) and recurrent (directed cyclic or undirected) networks

Reasoning: Not embedded, must be implemented separately
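The node description above can be sketched in a few lines of Python: each artificial neuron forms a weighted sum of its inputs and passes it through a threshold activation. The weights here are set by hand (an assumption for illustration) so that a two-layer feedforward network computes XOR; in a trained network they would be found by a learning algorithm.

```python
def step(z):
    """Threshold activation: fire (1) when the weighted input is non-negative."""
    return 1 if z >= 0 else 0


def neuron(inputs, weights, bias, activation=step):
    """Weighted sum of the inputs plus a bias, passed through the activation function."""
    return activation(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_net(x1, x2):
    """Two hidden neurons (OR and AND) feeding one output neuron."""
    h_or = neuron([x1, x2], [1, 1], -0.5)   # fires if at least one input is 1
    h_and = neuron([x1, x2], [1, 1], -1.5)  # fires only if both inputs are 1
    return neuron([h_or, h_and], [1, -1], -0.5)  # OR and not AND
```

Note that the knowledge "XOR" appears nowhere explicitly; it is spread across the weights and biases, which is why reasoning about what the network knows has to be done separately.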

Markov Random Fields

Structured and taught to represent knowledge/information in the form of sequences or more complicated data structures

Knowledge/information is represented by a set of random variables having a Markov property described by an undirected graph or network (a.k.a. Markov network)

Network connections or nodal edges represent a probabilistic dependency between variables

Markov networks can represent certain dependencies that a Bayesian network cannot (such as cyclic dependencies), but they cannot represent certain dependencies that a Bayesian network can (such as induced dependencies); Markov models are (generally) noncausal, though hybrid approaches can incorporate some causality

Specific examples: Boltzmann machines (stochastic version of Hopfield networks; also a stochastic ANN), hidden Markov random fields

Special cases/hybrid random fields: Markov chains, hidden Markov models (HMMs), conditional random fields, maximum entropy Markov models, deep/sigmoid belief networks

Markov chains are a special hybrid case, representing a sequence of random variables having a Markov property, usually described by a directed graph (cyclic or acyclic); connections carry the probability of going from one state to the next, independent of the states or sequence of states that preceded the current one; a Markov chain is also a stochastic Markov process implementation of a weighted finite-state automaton

HMMs are sometimes classified as simple dynamic Bayesian networks, and are Markov chains with a noisy observation about the state at each time step added

Reasoning: Embedded probabilistic inferencing: Networks calculate the conditional distribution of a set of nodes given values to another set of nodes by summing over all possible assignments (exact inference); approximate inference can be achieved through various algorithmic approaches, including belief propagation

Hybrid random fields: Representations that use latent variables to propagate information through time can be divided into two classes: tractable models, for which there is an efficient procedure for inferring the exact posterior distribution over the latent variables (such as HMMs), and intractable models, for which exact inference has no efficient procedure or is NP-hard (such as generalized Bayesian nets, MRFs and associated hybrids)
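HMMs are the standard example of a tractable latent-variable model, and the forward algorithm shows why: the sum over all hidden state sequences is computed one time step at a time. The Python sketch below is a minimal version for a two-state model; the states, observations and probabilities are invented for illustration.

```python
def forward(observations, start, trans, emit):
    """Return P(observations) for an HMM using the forward algorithm."""
    states = list(start)
    # alpha[s] = P(observations so far, current hidden state = s)
    alpha = {s: start[s] * emit[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {
            s: emit[s][obs] * sum(alpha[r] * trans[r][s] for r in states)
            for s in states
        }
    return sum(alpha.values())


# Hypothetical model: hidden weather, observed umbrella use.
START = {"rain": 0.5, "dry": 0.5}
TRANS = {
    "rain": {"rain": 0.7, "dry": 0.3},
    "dry": {"rain": 0.3, "dry": 0.7},
}
EMIT = {
    "rain": {"umbrella": 0.9, "none": 0.1},
    "dry": {"umbrella": 0.2, "none": 0.8},
}

likelihood = forward(["umbrella", "umbrella"], START, TRANS, EMIT)
```

The cost grows linearly with sequence length and quadratically with the number of states, instead of exponentially as it would if every hidden sequence were enumerated.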

Bayesian Networks

Structured and taught to represent knowledge/information in the form of sequences or more complicated data structures

Knowledge/information is represented by nodes and connections via a directed acyclic graph

Network nodes are Bayesian random variables

Network connections represent probabilistic dependence between variables, with conditional probabilities encoding the strength of the dependencies

Each network node is associated with a conditional probability distribution function P(x|Parents(x)) that takes as input a particular set of values for the node's parent variables and gives the probability of the variable represented by the node

Acquired knowledge is stored in the pattern of conditional probabilities, set by a priori knowledge (nodal evidence) and changed through learning or inferencing

Bayesian networks/models can encode causal relationships, and are often referred to as belief networks

Specific example: Network could represent the probabilistic relationships between diseases and symptoms; given symptoms, the network can be used to compute the probabilities of the presence of various diseases

Hybrid random fields (see above) are special classes of Bayesian nets

Reasoning: Embedded probabilistic inferencing, specifically evidential reasoning: Networks calculate posterior probabilities of an event as output, given a priori nodal evidence; probabilistic inferencing in generalized fully Bayesian nets can be intractable (NP-hard), requiring approximate inference algorithms

Bayesian and Markov nets differ from deterministic, non-stochastic neural nets:

Bayesian/Markov nets generate a probabilistic output of event occurrences

Network structure (connectivity, nodes) can change through inferencing (probabilistic reasoning) and learning

Bayesian belief nets can represent causal information

Bayesian and Markov nets are types of stochastic ANNs, and in general they together represent hybrid random fields
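The disease-and-symptom example mentioned for Bayesian networks can be sketched directly in Python. The network below has one disease node with two symptom nodes as children, and the posterior is obtained by enumerating the two disease states. All probabilities and names are invented for illustration.

```python
P_DISEASE = 0.01  # prior P(disease)

# P(symptom present | disease state), one table per child node
P_SYMPTOM = {
    "fever": {True: 0.90, False: 0.05},
    "cough": {True: 0.80, False: 0.10},
}


def joint(disease, evidence):
    """P(disease state, evidence), using the factorization given by the graph."""
    p = P_DISEASE if disease else 1 - P_DISEASE
    for symptom, present in evidence.items():
        p_present = P_SYMPTOM[symptom][disease]
        p *= p_present if present else 1 - p_present
    return p


def posterior_disease(evidence):
    """P(disease | evidence) by normalizing over both disease states."""
    with_disease = joint(True, evidence)
    return with_disease / (with_disease + joint(False, evidence))
```

With these made-up numbers, observing fever alone raises the probability of disease from 0.01 to roughly 0.15, and observing fever and cough together raises it to roughly 0.59. Exact enumeration like this is only feasible for small networks, which is where the approximate methods come in.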

Memory-Prediction Frameworks (MPFs)

Knowledge/information is represented as a bi-directional hierarchy of memories, from which predictions can be made, given sensory inputs

Inspired by the dense hierarchical interconnected structure of the neocortex, modeled to classify inputs, learn sequences of patterns, form a constant pattern or an invariant representation for a sequence, and make specific predictions

Memories are represented auto-associatively: higher levels predict future input by matching partial sequences and projecting their expectations to the lower levels; recall is based upon partial information of stored representations

(As such, MPFs may be suited best for vision systems, but have more limited use for natural language and speech, or for general cognitive intelligence where data structures beyond local sequences are required)

Learning occurs through a mismatch between bottom-up inputs and top-down expectations: newly learned representations get propagated upward through the hierarchy to form a more complete, invariant representation from which future predictions can be made

Specific examples: Hierarchical temporal memory (HTM)

Reasoning: Embedded probabilistic reasoning and inferencing

Cognitive Memory

Knowledge/information is represented as closely as possible by the functional memory and encodings of a cognitive system, such as the human or mammalian neocortex/old brain

Functional memory in general may include associative memory (auto and hetero) and non-associative memory, or alternatively, short-term memory and long-term memory

Encoding schemes in general may include those identified with actual neural encoding schemes found in conjunction with short and long-term functional memory, and have structure beyond local sequences (i.e., constituent structures, long-term dependencies, sparse relational structures, etc.)

(Note: I qualify the term encoding since some in the neuroscience community argue persuasively that there are problems with linking artificial computational encoding schemes with those of the brain, particularly those used in higher-level processing vs. those used in early sensory processing)

Neurocognitive networks are biologically inspired models incorporating the large-scale structure and dynamics of the brain, particularly the processing in the thalamocortical and corticocortical system, nominally aimed at the neural circuit/network scale – some of the efforts within this thrust can be generalized to more algorithmic approaches that would essentially be similar to the HTM model discussed above, or more complex generalizations of it

Readers should note key considerations for artificial cognitive systems (representations and architectures), including some of the more unconventional observations and properties

These representation models will be required for artificial cognitive systems and cognitive architectures that exhibit natural language understanding and other grand challenge functions shown in Fig. 1

The goal is to continually develop cognitive representations and associated architectures as we learn more about the mammalian/human neural structure (functional and physical)
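To give a feel for auto-associative memory, where recall works from partial or corrupted information, here is a minimal Hopfield-style sketch in Python. It is a classical textbook model used purely as an illustration, not a cognitive memory as described above; the stored pattern and function names are assumptions.

```python
def train_hopfield(patterns):
    """Hebbian outer-product rule: strengthen links between co-active units."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, probe, sweeps=5):
    """Update units one at a time until the state settles on a stored pattern."""
    state = list(probe)
    for _ in range(sweeps):
        for i in range(len(state)):
            field = sum(w * s for w, s in zip(weights[i], state))
            state[i] = 1 if field >= 0 else -1
    return state


STORED = [1, -1, 1, -1, 1, -1]
W = train_hopfield([STORED])
noisy = [1, -1, -1, -1, 1, -1]  # third element flipped
restored = recall(W, noisy)
```

The corrupted probe is pulled back to the stored pattern. A hetero-associative memory would instead map one pattern onto a different, paired pattern.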

Readers looking for more detail on how some of these representations are used in natural language processing (NLP) and automatic speech recognition (ASR) may want to review the Primer on NLP. (I am also working on a primer on machine vision, which will also include such detail for that application, and plan a future primer on cognitive systems and architectures.) The Primer on Machine Learning may also be of side benefit.

(Disclaimer: This primer is meant to inform. I encourage readers who find factual errors or deficits to contact me (click on contact link below). I also welcome constructive and friendly comments, suggestions and dialogue.)